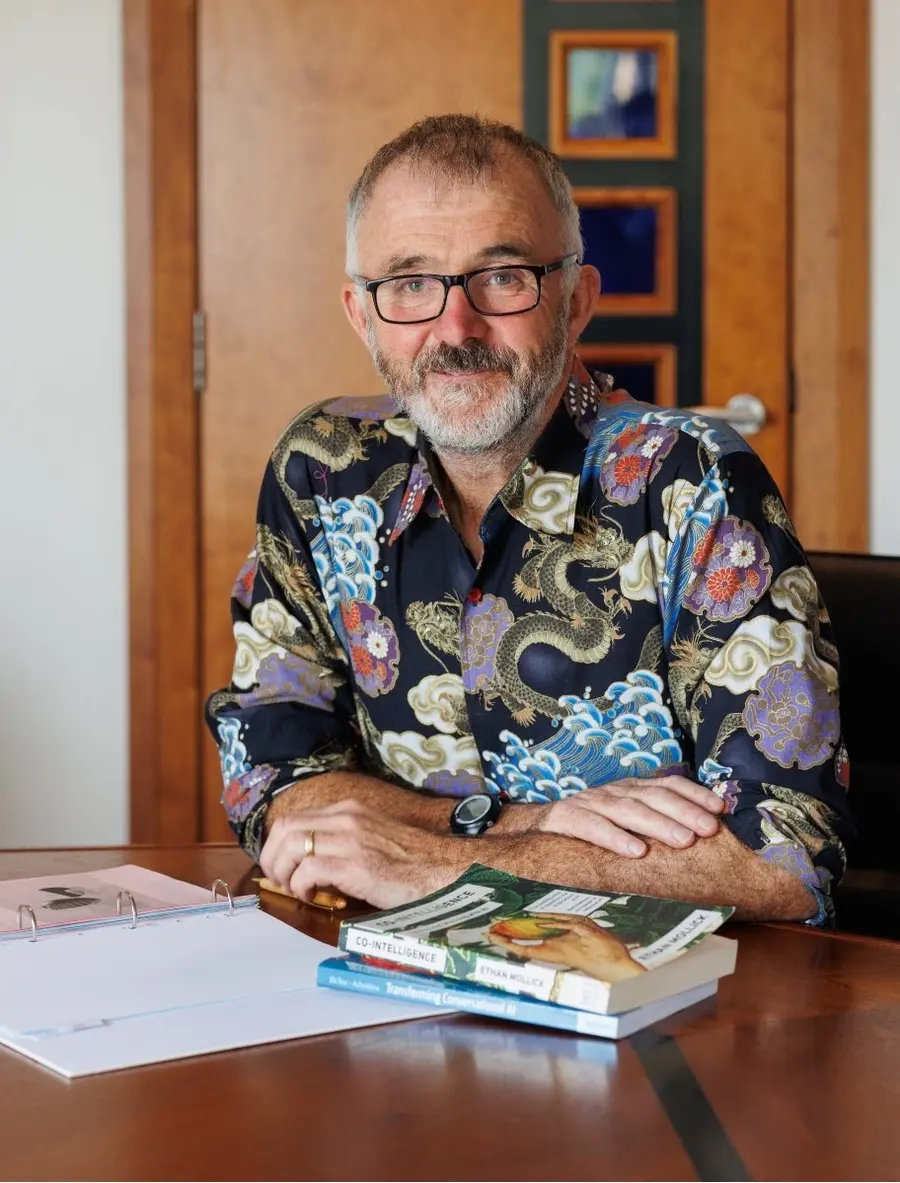

Barry Phillips (CEO) BEM founded Legal Island in 1998. He is a qualified barrister, trainer, coach and meditator and a regular speaker both here and abroad. He also volunteers as a mentor to aspiring law students on the Migrant Leaders Programme.

Barry has trained hundreds of HR Professionals on how to use GenAI in the workplace and is the author of the book “ChatGPT in HR – A Practical Guide for Employers and HR Professionals”.

Barry is an Ironman and lists Russian language and wild camping as his favourite pastimes.

This week Barry Phillips asks “Does AI have a reputation in the workplace that requires a complete rethink and rebrand?”

Transcript:

Hello Humans!

Welcome to the weekly podcast that aims to summarise an important development in AI relevant to the world of HR in five minutes or less. My name is Barry Phillips.

This week we’re asking this. Are we heading towards a view of AI in the workplace that we probably already have about social media: namely, that overall it actually does more harm than good?

AI’s reputation in the workplace right now is not great. In fact, let’s be honest, it’s pretty poor. It’ll take our jobs. It will create slop as a standard output. Oh, and it might end the world as we know it.

There are four points I think we need to consider here.

Point 1. Stuff that really is about AI

Let's be clear: some criticisms of AI are completely legitimate.

Copyright concerns top the list. Artists and creators understandably bristle when their work gets fed into systems that generate new content without compensation or attribution. It's like having an unpaid intern who never sleeps and never credits sources.

Employment anxiety runs deep too. When companies announce AI adoption with reassuring phrases like "augmenting human capabilities," employees hear something closer to "your desk might be empty next quarter." Decades of corporate doublespeak have earned that skepticism.

It certainly doesn't inspire confidence when industry executives struggle to articulate a vision where humans remain central. You'd think "people matter in our future plans" wouldn't be such a controversial statement.

Point 2. Stuff that's about tech culture, not AI

Here's where it gets interesting.

The tech industry's credibility bank is overdrawn. Years of grandiose claims, founder worship, and products marketed as world-changing solutions to problems nobody had—the public's appetite for this has evaporated.

Then there's the social media reckoning. We've collectively looked back at the past decade-plus of endless scrolling, algorithmic manipulation, and arguments with anonymous accounts, and concluded maybe this wasn't the communication revolution we were promised.

AI's misfortune? It arrived dressed in Silicon Valley's uniform just as the dress code became toxic. Timing matters.

When OpenAI unveiled Sora alongside a social video platform, public reaction wasn't applause for technological advancement. It was exhaustion: here comes another tool designed to capture and monetise our attention.

Point 3. Stuff that's about the economy and the world being… well… the world

This might be the most crucial factor.

Economic inequality has created a divergence where investment portfolios soar while paychecks stagnate and homeownership becomes fantasy. Large segments of the population feel economically stranded.

Now introduce AI, accompanied by fanfare about efficiency gains, productivity multipliers, and wealth creation for founders. For many, this reads as "the system that already doesn't work for me is about to accelerate."

AI becomes shorthand for "technological change that benefits others." That's not fundamentally an AI issue—it's AI inheriting decades of unresolved economic frustration.

Point 4. Good old-fashioned fear of the future

Baseline anxiety is elevated across the board.

Job security feels precarious. Political stability seems uncertain. Climate trajectories look concerning. Daily life carries an intensity that feels unsustainable.

Drop AI into this context, and it registers as yet another uncontrollable force reshaping life without permission. Edelman's research confirms this pattern: AI skeptics consistently describe feeling like the technology is being imposed rather than offered.

Trust naturally suffers under these conditions.

So what do we do with all this?

Here's the surprising part.

The majority of AI-anxious people haven't been harmed by the technology directly. Their concerns stem from perception rather than personal negative experiences.

Which means there's still room for a different trajectory.

Organisations need transparent communication about AI implementation—acknowledging genuine tradeoffs, not just benefits. Proper training programmes matter, not hastily assembled prompt-engineering sessions using outdated information.

The hype cycle needs disruption. Both utopian promises and apocalyptic warnings obscure reality. Stop suggesting that productivity improvements automatically translate to widespread prosperity—they historically haven't without intentional policy choices.

Most importantly, we need concrete, believable descriptions of how AI creates tangible improvements in regular people's lives. Not abstract talk about emerging industries, but specific, relatable scenarios.

Here's the interesting pattern: younger demographics aren't debating whether to engage with AI. They've accepted it as infrastructure for their future, regardless of their feelings about it, so they're building competency.

Everyone else faces a choice: develop understanding and agency, or watch from the margins.

To wrap up. AI's reputation crisis isn't a single problem—it's a combination of legitimate technology concerns, tech industry baggage, economic displacement anxiety, and general uncertainty about the future, with copyright issues sprinkled on top for good measure.

Thank you to Nathaniel Whittemore of the AI Breakdown podcast, whose recently aired thoughts have shaped the views above.

Until next week, bye for now.

AI Literacy Skills at Work: Safe, Ethical and Effective Use